Real-time Network Flow Generator

Overview

While working at j-sas, we were building software to detect network anomalies in real time using machine learning and AI models.

A general overview of the software's components is shown below. The blue elements were the parts I was involved with:

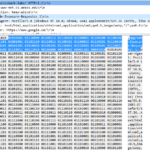

Capture Raw Binary Network Data: The first stage involved collecting network data. Data entered our system in raw binary format and was captured and processed in real time.

Extract Network Information: In this stage, the binary data captured in the previous stage was parsed and converted into human-readable values.
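As an illustration of this stage, the Java sketch below decodes the source and destination addresses, the protocol number and the ports from a single Ethernet II frame carrying IPv4. It is a minimal example under stated assumptions, not the code used in the project: the names `PacketDecoder` and `PacketInfo` are hypothetical, VLAN tags, IP fragments and IPv6 are not handled, and records require Java 16 or later.

```java
import java.nio.ByteBuffer;

/** Hypothetical decoder for an Ethernet II frame carrying IPv4 (no VLAN tags, no IPv6). */
final class PacketDecoder {
    static final int ETHERNET_HEADER_LEN = 14;
    static final int MIN_IPV4_HEADER_LEN = 20;
    static final int ETHERTYPE_IPV4 = 0x0800;
    static final int PROTO_TCP = 6;
    static final int PROTO_UDP = 17;

    /** Human-readable summary of one packet; ports are -1 when not TCP/UDP. */
    record PacketInfo(String srcIp, String dstIp, int protocol, int srcPort, int dstPort) {}

    /** Returns null when the frame is too short or does not carry IPv4. */
    static PacketInfo decode(byte[] frame) {
        if (frame.length < ETHERNET_HEADER_LEN + MIN_IPV4_HEADER_LEN) return null;
        // ByteBuffer defaults to big-endian, which is network byte order.
        ByteBuffer buf = ByteBuffer.wrap(frame);
        int etherType = buf.getShort(12) & 0xFFFF;   // after 6-byte dst MAC and 6-byte src MAC
        if (etherType != ETHERTYPE_IPV4) return null;

        int ip = ETHERNET_HEADER_LEN;                 // offset of the IPv4 header
        int ihl = (buf.get(ip) & 0x0F) * 4;          // IHL is the low nibble, counted in 32-bit words
        if (ihl < MIN_IPV4_HEADER_LEN || frame.length < ip + ihl) return null;
        int protocol = buf.get(ip + 9) & 0xFF;
        String src = ipv4ToString(buf.getInt(ip + 12));
        String dst = ipv4ToString(buf.getInt(ip + 16));

        int srcPort = -1;
        int dstPort = -1;
        int l4 = ip + ihl;                            // transport header starts after any IPv4 options
        if ((protocol == PROTO_TCP || protocol == PROTO_UDP) && frame.length >= l4 + 4) {
            srcPort = buf.getShort(l4) & 0xFFFF;      // TCP and UDP both begin with src/dst ports
            dstPort = buf.getShort(l4 + 2) & 0xFFFF;
        }
        return new PacketInfo(src, dst, protocol, srcPort, dstPort);
    }

    /** Formats a 32-bit address as dotted-quad text, e.g. 0xC0A8010A -> "192.168.1.10". */
    static String ipv4ToString(int addr) {
        return ((addr >>> 24) & 0xFF) + "." + ((addr >>> 16) & 0xFF) + "."
                + ((addr >>> 8) & 0xFF) + "." + (addr & 0xFF);
    }
}
```

Masking with `& 0xFF` and `& 0xFFFF` converts Java's signed `byte` and `short` values into the unsigned fields the protocol headers define; forgetting it is a typical source of negative port numbers.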

Higher-Level Feature Generation: Information from individual packets was then combined to build higher-level features, which were fed into the user dashboards and the ML models. These features gave more context about what was happening on the network than individual packets could on their own.
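One common way to build such features is to group packets into flows keyed by the 5-tuple (source IP, destination IP, source port, destination port, protocol) and keep running counters per flow. The sketch below assumes that approach; `FlowKey`, `FlowStats`, `FlowAggregator` and the chosen features (packet count, byte count, duration, mean packet size) are hypothetical illustrations, not the project's actual feature set. It is single-threaded and does not merge the two directions of a conversation into one flow.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical 5-tuple identifying a flow; records give value-based equals/hashCode. */
record FlowKey(String srcIp, String dstIp, int srcPort, int dstPort, int protocol) {}

/** Running per-flow counters; each field is an illustrative feature. */
final class FlowStats {
    long packets;
    long bytes;
    long firstSeenMicros = Long.MAX_VALUE;
    long lastSeenMicros = Long.MIN_VALUE;

    void add(int length, long tsMicros) {
        packets++;
        bytes += length;
        firstSeenMicros = Math.min(firstSeenMicros, tsMicros);
        lastSeenMicros = Math.max(lastSeenMicros, tsMicros);
    }

    long durationMicros() { return packets == 0 ? 0 : lastSeenMicros - firstSeenMicros; }

    double meanPacketSize() { return packets == 0 ? 0.0 : (double) bytes / packets; }
}

/** Hypothetical aggregator: one FlowStats entry per distinct 5-tuple. */
final class FlowAggregator {
    private final Map<FlowKey, FlowStats> flows = new HashMap<>();

    void onPacket(FlowKey key, int length, long tsMicros) {
        // Create the flow entry on its first packet, then update its counters.
        flows.computeIfAbsent(key, k -> new FlowStats()).add(length, tsMicros);
    }

    FlowStats get(FlowKey key) { return flows.get(key); }

    int flowCount() { return flows.size(); }
}
```

In a real-time setting the map would also need an eviction policy (for example, closing flows after an idle timeout) so that memory stays bounded.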

User Dashboard: The user dashboard had various graphs and charts visualizing the information retrieved from the ML models and the feature generators. I built the dashboard with AngularJS and integrated various front-end libraries for displaying charts and graphs.

Challenges

Two of the main challenges on this project are outlined below:

Working with binary data: The data we captured arrived in binary format. Extracting meaningful information from it required strong knowledge of the binary structure of pcap files (https://tools.ietf.org/html/draft-gharris-opsawg-pcap-01) and of network communications, as well as experience with tools such as Wireshark. Miscalculating a single byte could throw off the entire model.
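To illustrate the kind of byte-level work involved, here is a minimal Java sketch that parses classic pcap data held in memory, following the layout in the draft linked above: a 24-byte file header followed by records, each with a 16-byte header and the captured packet bytes. The names `PcapReader`, `PacketRecord` and `Capture` are hypothetical, and pcapng is not supported. The magic number also tells the reader which byte order the file was written in; reading with the wrong order, or from an offset that is off by one byte, silently corrupts every later field.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical reader for classic (non-pcapng) pcap data already loaded into memory. */
final class PcapReader {
    static final int MAGIC_MICROS = 0xA1B2C3D4; // timestamps in seconds + microseconds
    static final int MAGIC_NANOS = 0xA1B23C4D;  // timestamps in seconds + nanoseconds
    static final int FILE_HEADER_LEN = 24;
    static final int RECORD_HEADER_LEN = 16;

    record PacketRecord(long tsSeconds, long tsFraction, int capturedLength, int originalLength, byte[] data) {}

    record Capture(boolean nanosecond, int snapLen, int linkType, List<PacketRecord> records) {}

    static Capture parse(byte[] file) {
        if (file.length < FILE_HEADER_LEN) throw new IllegalArgumentException("file header truncated");
        ByteBuffer buf = ByteBuffer.wrap(file).order(ByteOrder.BIG_ENDIAN);
        int magic = buf.getInt(0);
        // If the magic number reads back byte-swapped, the writer used the opposite byte order.
        if (magic == Integer.reverseBytes(MAGIC_MICROS) || magic == Integer.reverseBytes(MAGIC_NANOS)) {
            buf.order(ByteOrder.LITTLE_ENDIAN);
            magic = Integer.reverseBytes(magic);
        } else if (magic != MAGIC_MICROS && magic != MAGIC_NANOS) {
            throw new IllegalArgumentException("unrecognized magic number");
        }
        buf.position(16);                      // skip magic (4), major/minor version (2+2), two reserved fields (4+4)
        int snapLen = buf.getInt();
        int linkType = buf.getInt() & 0xFFFF;  // low 16 bits carry the link-layer type (1 = Ethernet)

        List<PacketRecord> records = new ArrayList<>();
        while (buf.remaining() >= RECORD_HEADER_LEN) {
            long seconds = Integer.toUnsignedLong(buf.getInt());
            long fraction = Integer.toUnsignedLong(buf.getInt()); // micro- or nanoseconds, per the magic
            int capturedLen = buf.getInt();    // bytes actually stored in the file
            int originalLen = buf.getInt();    // bytes on the wire (may exceed capturedLen)
            if (capturedLen < 0 || capturedLen > buf.remaining()) {
                throw new IllegalArgumentException("record truncated");
            }
            byte[] data = new byte[capturedLen];
            buf.get(data);
            records.add(new PacketRecord(seconds, fraction, capturedLen, originalLen, data));
        }
        return new Capture(magic == MAGIC_NANOS, snapLen, linkType, records);
    }
}
```

A live capture would read the same structures incrementally from a stream rather than from a byte array, but the offsets and the byte-order handling stay the same.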

Working with high concurrency and large volumes of data in real time: If you install Wireshark and capture packets on your computer for just a few seconds, you might end up with thousands of packets. If you open YouTube or another streaming site, you can only imagine how much more data will be captured. This project, however, was being built for networks of hundreds or even thousands of computers, all constantly generating traffic. Capturing this traffic, parsing it and building higher-level features all had to be done in real time. What made this even more complex was that many of the higher-level features required aggregating packets, which in turn required storage methods to retrieve older packets. To keep up with the flow of information in real time, the source code made heavy use of concurrency and parallelism.
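The exact concurrency design is not described here, so the following is only one common pattern, sketched under assumptions: packets are sharded across worker threads by a hash of their flow key, so every packet of a given flow lands on the same thread and each worker can update its own per-flow state without locks. `PartitionedFlowProcessor` and `Packet` are hypothetical names, and the flow key is simplified to a `String`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Hypothetical sketch: hash-partition packets so each flow is owned by exactly one worker thread. */
final class PartitionedFlowProcessor {
    record Packet(String flowKey, int length) {}

    private static final Packet POISON = new Packet("", -1); // shutdown marker, compared by reference

    private final List<BlockingQueue<Packet>> queues = new ArrayList<>();
    private final List<Map<String, Long>> bytesPerFlow = new ArrayList<>(); // one map per worker
    private final List<Thread> workers = new ArrayList<>();

    PartitionedFlowProcessor(int workerCount, int queueCapacity) {
        for (int i = 0; i < workerCount; i++) {
            BlockingQueue<Packet> q = new ArrayBlockingQueue<>(queueCapacity);
            Map<String, Long> state = new HashMap<>(); // only touched by worker thread i until join()
            queues.add(q);
            bytesPerFlow.add(state);
            Thread t = new Thread(() -> {
                try {
                    Packet p;
                    while ((p = q.take()) != POISON) {
                        state.merge(p.flowKey(), (long) p.length(), Long::sum);
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }, "flow-worker-" + i);
            workers.add(t);
            t.start();
        }
    }

    /** Called by the capture thread; blocks when the target worker's queue is full (back-pressure). */
    void submit(Packet p) throws InterruptedException {
        int shard = Math.floorMod(p.flowKey().hashCode(), queues.size());
        queues.get(shard).put(p);
    }

    /** Stops all workers and merges their per-flow byte counts. */
    Map<String, Long> shutdown() throws InterruptedException {
        for (BlockingQueue<Packet> q : queues) q.put(POISON);
        for (Thread t : workers) t.join(); // join() makes the workers' map updates visible here
        Map<String, Long> merged = new HashMap<>();
        for (Map<String, Long> m : bytesPerFlow) merged.putAll(m); // keys are disjoint across shards
        return merged;
    }
}
```

The bounded `ArrayBlockingQueue` means a worker that falls behind slows the producer down instead of letting memory grow without limit. The trade-off of partitioning by flow is that a single very heavy flow cannot be spread across several threads.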

Skills

Java, Spring Boot, AngularJS, MySQL, MongoDB

Description

Developed software to extract real-time information from network packets.

Skills

- Java, Spring Boot

- AngularJS

- Multithreading and high concurrency

- Working directly with binary data

- MySQL, MongoDB